Thunderbolt Temperature Sensor

Re: [time-nuts] Thunderbolt performance vs temperature sensor

Mark Sims Fri, 06 Mar 2009 09:57:39 -0800

I did some testing on a Thunderbolt with the new revision E2 (low resolution, flat line) and old revision D1 (high resolution, curvy line) DS1620 temperature sensor chips. The only thing that changed was the temperature sensor chip.

Basically, I put a unit that had the new revision E2 temperature sensor into manual holdover mode and ran Lady Heather over several 24 hour (approximately) periods with the log enabled. Between each period, I put the unit back into GPS discipline mode and let it recover.

Next I swapped out the temperature sensor chip with an old revision D1 chip and let the unit run for a week so that it had a chance to relearn any filter coefficients. Then I repeated the holdover log runs.

I processed the logs to calculate the spread in the PPS error reading over 1 hour intervals. With the new revision E2, low res temp sensor the Thunderbolt averaged 1.73 uS of PPS change per hour. With the old revision D1, high res temp sensor the unit averaged 0.82 uS of PPS change per hour.
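The hourly-spread calculation described above can be sketched as follows. This is only an illustration, not the actual processing that was used: the input is assumed to be a list of (time in seconds, PPS error) pairs already extracted from the log, the function name `hourly_pps_spread` is hypothetical, and the sample data is synthetic.

```python
from collections import defaultdict

def hourly_pps_spread(samples, interval_s=3600):
    """Return (per-interval spreads, mean spread).

    samples: iterable of (time_in_seconds, pps_error) pairs (assumed format).
    The spread of an interval is max(pps) - min(pps) within that interval.
    """
    buckets = defaultdict(list)
    for t, pps in samples:
        buckets[int(t // interval_s)].append(pps)
    spreads = [max(v) - min(v) for _, v in sorted(buckets.items())]
    mean = sum(spreads) / len(spreads) if spreads else 0.0
    return spreads, mean

# Synthetic example: PPS error drifting linearly at 1.5 us per hour,
# sampled every 60 s for 3 hours.
samples = [(t, 1.5 * t / 3600.0) for t in range(0, 3 * 3600, 60)]
spreads, mean = hourly_pps_spread(samples)
print(spreads, mean)
```

Taking max minus min per interval is one reading of "spread"; a standard deviation per interval would be an equally plausible figure of merit.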

So, it appears that the Thunderbolt does indeed use the temperature sensor readings in its disciplining of the oscillator (which is also obvious from the plots of DAC voltage vs temperature) and that the unit's performance (at least the holdover performance) was adversely affected when the DS1620 temperature sensor chip was changed going from rev D to rev E.

Tom Van Baak Sat, 07 Mar 2009 20:16:16 -0800

Hi Mark,

This is very interesting work that you're doing with Thunderbolt DS1620 temperature sensors. I hope you stick with it. I agree with Said about the double-blind idea.

I worry too that your TBolts are remembering something of the past in spite of the hardware changes you make for each new run. Do you do a full factory reset each time? And then let the unit “re-learn” for several hours, or maybe several days? Do we really know how or what it is learning and how that affects the response to temperature?

Having tried tempco measurements in the past I have several concerns about methodology. It's really hard to get this right and even harder when you don't know what “smart” algorithms are inside the box you're trying to test.

In this situation, it seems to me the main thing about temperature is not temperature at all, but the *rate* at which the temperature changes.

In that case, even careful cycling of room temperature every day or cycling temperature inside a special chamber every hour will not give you the real story – because in both cases the focus is on varying the temperature; not the rate at which the temperature is changing.

Compounding the problem is that different components in the system will react to temperature at different rates. The DS1620 is plastic and may react quickly. The OCXO is metal and will react slowly. Who knows what additional component's tempco are relevant to the final 10 MHz output. Some may overreact at first and then settle down.

I guess in the ideal world you'd want to do a “sweep” where you go through several cycles of temperature extreme at rates varying from, say, one cycle per minute all the way up to one cycle per day.

It seems to me you'd end up with some kind of spectrum, in which tempco is a function of temp-cycle-rate. Has anyone seen analysis like this?

For example, I'd guess that most GPSDOs have low sensitivity to wild temperature cycles every second – because of their own thermal mass. And I bet most GPSDOs have low sensitivity to wild temperature swings every few hours – because the OCXO easily handles slow changes like this well. It's for time scales in between those two that you either hit sweet spots or get very confused and react just opposite of what you should.

I'm thinking another testing approach is to vary the temperature somewhat randomly; with random temperature *amplitude* along with random temperature *rate*. Using this temperature input, and measured GPSDO phase or frequency output, you might be able to do some fancy math and come up with a transfer function that tells the whole story; correlation; gain and lag as a function of rate, or something like that. I'll do some reading on this, or perhaps someone on the list can fill in the details?

I say all of this – because of an accident in my lab today. Have a careful look at these preliminary plots and tell me what you think. It shows anything but a nice one-to-one positive linear relationship between ambient temperature and GPSDO output.

http://www.leapsecond.com/pages/tbolt-temp/

/tvb

Magnus Danielson Sun, 08 Mar 2009 09:03:40 -0700

Tom Van Baak wrote:

See: http://www.leapsecond.com/pages/tbolt-temp/

> Hi Mark,
>
> This is very interesting work that you're doing with Thunderbolt DS1620 temperature sensors. I hope you stick with it. I agree with Said about the double-blind idea.
>
> I worry too that your TBolts are remembering something of the past in spite of the hardware changes you make for each new run. Do you do a full factory reset each time? And then let the unit “re-learn” for several hours, or maybe several days? Do we really know how or what it is learning and how that affects the response to temperature?
>
> Having tried tempco measurements in the past I have several concerns about methodology. It's really hard to get this right and even harder when you don't know what “smart” algorithms are inside the box you're trying to test.

The theory here is that we do have a smart algorithm. If so, we want to give that algorithm a decent chance by using the higher-resolution variant of the DS1620.

This also makes testing more troublesome.

> In this situation, it seems to me the main thing about temperature is not temperature at all, but the *rate* at which the temperature changes.
>
> In that case, even careful cycling of room temperature every day or cycling temperature inside a special chamber every hour will not give you the real story – because in both cases the focus is on varying the temperature; not the rate at which the temperature is changing.
>
> Compounding the problem is that different components in the system will react to temperature at different rates. The DS1620 is plastic and may react quickly. The OCXO is metal and will react slowly. Who knows what additional component's tempco are relevant to the final 10 MHz output. Some may overreact at first and then settle down.

True… but.

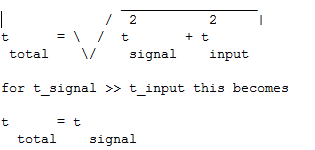

Consider that the OCXO and the temperature sensor both have time constants. You can model their thermal response through equivalent RC links (in reality you have many of these lumped together; this is a thought model). As long as the temperature rise time is much slower than the time constants of the OCXO and the temperature sensor, the observed rise time is dominated by the incident wave. A classic formula for this is:
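The formula itself is not reproduced in the text of this copy of the message. The rule normally quoted for cascaded rise times, and presumably the one meant here, is the root-sum-of-squares combination. The symbol names below are assumptions, and the 2.2 RC figure is the standard 10% to 90% rise time of a single RC link:

$$
t_{r,\mathrm{observed}} = \sqrt{t_{r,\mathrm{signal}}^{2} + t_{r,\mathrm{system}}^{2}},
\qquad
t_{r,\mathrm{system}} \approx 2.2\,RC
$$

When $t_{r,\mathrm{signal}} \gg t_{r,\mathrm{system}}$ the square root is approximately $t_{r,\mathrm{signal}}$, which is the "dominated by the incident wave" case.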

Signal in this case is the heat changes due to our main heater 8 light-minutes away.

Another thing I want to point out is that metal is a very good heat conductor, whereas plastic is a very poor one. One has to be careful not to confuse that with heat capacity, the ability to hold heat. We use metal flanges to remove heat from components, not plastic ones. The high conductivity of metal will make any temperature change move fairly fast throughout. This is why it is hard to solder on ground planes or metal chassis: the heat at the solder point distributes quickly, whereas soldering at some local point on a PCB is not that hard, as the plastic of the PCB is a relatively poor heat conductor.

So, you can expect the metal can of the OCXO to react fairly quickly and the temperature sensor to be a tad slow. This also matches exercises we have done using a fan for forced-air convection tests on an OCXO and temperature sensor. Putting a *plastic* hood over it significantly slowed the temperature rise, and the slower rise allowed the temperature sensor inside the OCXO to sense the change and react to it. This introduces a third time constant: that of the OCXO control. The effect of using a hood or not was significant. The shape of the frequency change was distinct, and for the measurement interval we looked at, the phase drift was about 1/3 with this simple addition.

Lessons learned:

* Temperature transients may expose OCXO time constants

* Passive isolation may aid in reducing gradient time constants

The OCXO oven control really just helps with slow-rate changes; it may span a wide temperature range, but not quickly. Passive isolation helps smooth out transients. For transients, heat capacity becomes an issue, and multiple layers of isolation and heat capacity act as higher-order filters.
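The filtering effect of passive isolation can be illustrated with the RC thought model. The sketch below is only a toy: each layer is treated as one first-order low-pass stage, the stages are assumed not to load each other, and the time constants (120 s for the bare unit, an extra 600 s for a hood) are made-up numbers, not measurements.

```python
def rc_stage(signal, tau_s, dt_s):
    """One thermal RC link as a discretized first-order low-pass filter."""
    alpha = dt_s / (tau_s + dt_s)
    out = []
    y = signal[0]                  # start in equilibrium with the input
    for x in signal:
        y += alpha * (x - y)
        out.append(y)
    return out

def cascade(signal, taus_s, dt_s):
    """Pass the signal through several RC links in series."""
    for tau in taus_s:
        signal = rc_stage(signal, tau, dt_s)
    return signal

def peak_rate(signal, dt_s):
    """Largest temperature change per second seen in the signal."""
    return max(abs(b - a) for a, b in zip(signal, signal[1:])) / dt_s

dt = 1.0
ambient = [20.0] * 60 + [25.0] * 3600          # 5 degree C step after one minute
bare = cascade(ambient, [120.0], dt)           # hypothetical bare unit
hooded = cascade(ambient, [600.0, 120.0], dt)  # hypothetical hood added in front
print(peak_rate(bare, dt), peak_rate(hooded, dt))
```

In this model the hooded case reaches the same final temperature, but with a much lower peak rate of change, which is what gives a slow oven control loop a chance to follow.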

> I guess in the ideal world you'd want to do a “sweep” where you go through several cycles of temperature extreme at rates varying from, say, one cycle per minute all the way up to one cycle per day.
>
> It seems to me you'd end up with some kind of spectrum, in which tempco is a function of temp-cycle-rate. Has anyone seen analysis like this?

To some degree yes… see above.

> For example, I'd guess that most GPSDOs have low sensitivity to wild temperature cycles every second – because of their own thermal mass.

Actually, if they sit in a forced-air environment, they will change temperature pretty quickly because their metal cover will conduct away the heat quite efficiently. Thermal mass is not a great measure unless you also consider the thermal conductivity; together they describe the thermal time constant.

If you put your GPSDO in a “heat pocket” and do not put it flush against some metal surface but rather use plastic spacers, you will be much better protected from transients and the turbulence of convection (forced or not).

> And I bet most GPSDOs have low sensitivity to wild temperature swings every few hours – because the OCXO easily handles slow changes like this well. It's for time scales in between those two that you either hit sweet spots or get very confused and react just opposite of what you should.

You just don't react quickly enough. OCXOs built to balance this out would turn out rather large. Our OSA 8600/8607 uses a Dewar flask embedded in foam inside the metal chassis, with another metal chassis inside of that. The outer shell is not temperature controlled but becomes notably warm anyway.

Your wine-cooler 8607 should have a foam wrapping and not stand metal-on-metal to become more efficient.

Regardless, temperature transients are best handled through isolation filters. Temperature gradients are reduced as well if this is done properly.

Unless an external temperature probe is thermally well connected to the OCXO it is not as useful and it can certainly not aid in anything but slow rate changes. This would become a TOCXO construct.

A thermally well-tied temperature probe could be used for transient suppression, come to think of it, as the speed of compensation through the EFC input is very high. For it to work one has to model the transient response properly. Basically one has to process the signal through a model filter. This could be done in analogue or digital.

> I'm thinking another testing approach is to vary the temperature somewhat randomly; with random temperature *amplitude* along with random temperature *rate*. Using this temperature input, and measured GPSDO phase or frequency output, you might be able to do some fancy math and come up with a transfer function that tells the whole story; correlation; gain and lag as a function of rate, or something like that. I'll do some reading on this, or perhaps someone on the list can fill in the details?

This can be done using MLS sequences and the cross-correlation would produce the impulse response of the heat-transfer mechanism.

The actual heat difference used does not have to be that large actually, as you aim to establish the impulse response at this stage. With a detailed enough impulse response you can then locate the poles and zeros of the filter.

You can use a transistor and a resistor (say a 2N3055 with some suitable resistance) modulated by the MLS sequence and then do frequency or phase measurements synchronously with the MLS sequence. Through the Nyquist theorem, the rate of the MLS sequence puts an upper limit on the highest rate you can measure. Here is a golden case where “smart” frequency measurements should be avoided, as their filtering would become included in the transient response. It could be compensated, naturally, but would only confuse the issue.

The amplitude of the temperature shifts will of course determine the frequency resolution. A short measurement period with large temperature shifts could work. When running over longer times, natural temperature changes as well as aging need to be suppressed; you then need to average over longer times, and a slightly lower modulation amplitude can be tolerated. Temperature changes and drift can be canceled from the measurement sequence prior to cross-correlation to reduce their impact. This can be done through ordinary frequency and drift prediction and removal.

Use of MLS sequences to characterize impulse responses is a well-established technique. The two major limitations are usually too low a modulation rate and too short an MLS sequence, besides the obvious one of dynamic range. Calibration of output and input stages can remove their impact.
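As a rough illustration of the MLS idea, the sketch below generates a 31-chip sequence with a 5-bit shift register, drives a made-up impulse response with it, and recovers that response by circular cross-correlation. Everything here is an assumption for illustration: the function names are hypothetical, the taps are valid for 5 bits only, the "system" is noiseless and linear, and a real heater and counter setup would need a much longer sequence plus drift removal.

```python
def mls(n_bits=5, taps=(5, 3)):
    """One period (2**n_bits - 1 chips) of a maximum length sequence as +1/-1.

    The default taps give a maximal sequence for n_bits=5 only.
    """
    state = [1] * n_bits                 # any non-zero start state works
    seq = []
    for _ in range(2 ** n_bits - 1):
        seq.append(1 if state[-1] else -1)
        feedback = 0
        for t in taps:
            feedback ^= state[t - 1]
        state = [feedback] + state[:-1]
    return seq

def circular_response(h, x):
    """Steady-state output of impulse response h driven by periodic input x."""
    n = len(x)
    return [sum(h[j] * x[(k - j) % n] for j in range(len(h))) for k in range(n)]

def recover_impulse_response(y, x):
    """Circular cross-correlation of y with x, corrected for the -1 floor
    of the MLS autocorrelation."""
    n = len(x)
    r = [sum(y[k] * x[(k - m) % n] for k in range(n)) for m in range(n)]
    total = sum(r)                       # equals the sum of the impulse response
    return [(rm + total) / (n + 1) for rm in r]

x = mls()
h_true = [0.0, 0.5, 0.3, 0.15, 0.05]     # made-up thermal impulse response
y = circular_response(h_true, x)
h_est = recover_impulse_response(y, x)
print([round(v, 6) for v in h_est[:6]])
```

The recovery is exact here only because the impulse response is shorter than one MLS period and there is no noise or drift; with a real unit the sequence length and chip rate set the usable time span and resolution, as noted above.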

> I say all of this – because of an accident in my lab today. Have a careful look at these preliminary plots and tell me what you think. It shows anything but a nice one-to-one positive linear relationship between ambient temperature and GPSDO output.
>
> http://www.leapsecond.com/pages/tbolt-temp/

It looks kind of typical.

The OCXO performs an imperfect differential task on the temperature scale; its limited gain leaves long-term changes uncompensated. Look at the second plot. As the temperature rises drastically, the phase dips dramatically. The oscillations display similar rise times and thus are let through.

For me this is somewhat of an expected impulse response. The GPSDO attempts to steer it back, which is why it does not look like the fully integrated frequency response one would expect from a free-wheeling OCXO.

Cheers, Magnus

SAIDJACK Fri, 06 Mar 2009 14:18:26 -0800

Hi,

While this is good news for Trimble's competition and may open up the avenue for an amateur mod, I think we would have to be fair to Trimble and do the double-blind test:

1) Put the original temp sensor back into the unit, let it run one week, and do the drift tests exactly the same as before.

2) remove the sensor completely from the board, and let it run without any sensor (if the unit works this way)

You may have seen the performance difference due to measurement errors, aging of the crystal, ambient temperature changes (there was over 1 week difference between the two tests, so I am sure ambient temperature effects were different on the two runs), etc.

To really get to the bottom of this, you would have to put the unit into a thermal chamber and cycle it from say -20C to +60C over an hour with say 10C steps and see the frequency change versus temperature.

bye, Said

Mark Sims Fri, 06 Mar 2009 16:04:01 -0800

Hello Said,

The Tbolt that I used for the test was well aged (several months of operation) and stable prior to the tests. It was only powered down for the 10 minutes or so that it took to swap out the old sensor.

I tried to choose data sets that were fairly comparable temperature wise. I also chose the basic measurement interval to be 1 hour so that temperature would not be changing much over the hour. I am fairly confident that the results reflect changes due to the temperature sensor.

I wanted to make the measurement PPS drift / degree C change / hour but the later model (low res) temp sensor chip would seldom produce a recordable change in temperature over a one hour period.

Mark Sims Sun, 08 Mar 2009 11:00:29 -0700

The test that I did was meant to reflect how a typical user would be using a Thunderbolt… sitting out in free air on a piece of poly foam, covered by a cardboard box to provide a little isolation from environmental transients. Tbolt internal temperature changes were around 3 deg C per day and 0.3C per hour. My chosen figure-of-merit for the tests was how much the PPS signal changed (as reported by the Thunderbolt message) over an hour.

The unit had run continuously for several months. Before I swapped the temp sensor chip, I made several runs where at 11:00 AM I put it into manual holdover mode and started the log. I recorded its self-measured data for around 23 hours, stopped the log, put it into normal operation mode for an hour.

Next, I shut it down and swapped the temp sensor chip. This took about 10 minutes. I then restarted it, let it stabilize for a day, and did a factory reset. I let it run for four days in GPS locked mode and four days with the 23 hour holdover mode cycles. This (hopefully) gave it some time to learn about the new temp sensor. Next I repeated the “official” 23 hour holdover test runs.

The average of the 1 hour PPS holdover deviations with the newer low res temp sensor was 1.73 uS per hour. The average of the 1 hour PPS deviations with the older high res sensor was 0.82 uS per hour.

Yes, it would be nice to put the unit into an environmental chamber and put it through its paces and discover all sorts of neat things about it. I was more interested in how the change in the DS1620 temperature sensor chip affected the units in a way that I might actually see in the way that I use them. I have already swapped the temp sensor chip three times in this unit (original E2 chip, another E2 chip, and the D1 chip) and don't really want to risk damaging it with two more swaps (back to E2 and then D1).

Tests on a single unit are hardly conclusive, but it appears that you can double your holdover performance by using the older DS1620 chip. Also, by looking at the DAC and TEMP plots the temp sensor chip readings ARE used in generating the DAC voltage in GPS locked mode… so I am assuming that the performance also would be improved in GPS locked mode (but by how much and how to test that have yet to be determined).